Matching Messy Texts

The Problems of Free Form Text

One of the joys of language is that there is an infinite variety of ways in which we can express ourselves. Conventions vary. People are likely to run afoul of grammatical and spelling conventions depending on their background, attention to detail and adherence to convention. This expressive variety becomes a disadvantage for a data analyst who wants to analyse text. Urban datasets frequently contain free form language; names, addresses, surveys, regulations, complaints, tweets, posts, newspaper reports, plans, interviews, ordinances etc. When the text is not standardised and restricted, they pose problems for a data analyst who is trying to reduce and distill the information, often ignoring the context and conventions that the gives meaning to the text. The text is then wrangled, standardised or ignored. In this blog, I will show how one might attempt to wrangle text. I also want to highlight the decisions that the analyst makes that severely distort the meaning and introduce errors.

All clean datasets are alike. But each messy dataset is messy in its own way — Prener paraphrasing Wickham paraprhasing Tolstoy

Data & Required Packages

The Worker Adjustment and Retraining Notification Act (WARN) requires employers with 100 or more employees (generally not counting those who have worked less than six months in the last 12 months and those who work an average of less than 20 hours a week) to provide at least 60 calendar days advance written notice of a plant closing and mass lay-off affecting 50 or more employees at a single site of employment. In North Carolina, the Department of Commerce is in charge of collecting and archiving the notices. Research by Cleveland Federal Reserve Bank suggests that these notices are useful bellwethers for economic conditions in the state.

In this blog, I am going to use another business listings dataset from Infogroup called ReferenceUSA to establish additional information about the business that is listed in the WARN database. We are limiting our analysis to Mecklenberg county in North Carolina. ReferenceUSA data for the US can be obtained from UNC library. I obtained the WARN dataset from Cleveland Federal Reserve. Citation for the data is

- Krolikowski, Pawel M. and Kurt G. Lunsford. 2020. “Advance Layoff Notices and Labor Market Forecasting.” Federal Reserve Bank of Cleveland, Working Paper no. 20-03. https://doi.org/10.26509/frbc-wp-202003

In this tutorial, I am going to use stringr, stringdist, postmastr and censusxy packages in addition to other packages in R. postmastr is not yet available on CRAN, but can be installed from github using

remotes::install_github("slu-openGIS/postmastr")

library(tidyverse)

library(skimr)

warn <-

here("tutorials_datasets", "textmatching", "WARN_Mecklenburg.csv") %>%

read_csv()

names(warn)

# [1] "county" "notice_date" "received_date" "effective_date"

# [5] "company" "type" "number_affected" "zipcode_city"

# [9] "warn_no" "address"

Parsing the Addresses

Most geocoders expect an address that is properly standardised (e.g. South - S, Bvld - Boulevard ) and spelling errors corrected. This is important as user entered text about addresses rely on personal and local convention rather than standardisation.

postmastr works by following the precise sequence of the following steps.

Preparation

First there is a need to create a unique ID for unique addresses, so that only unique addresses are parsed.

A quick look at the address column reveals that it has newlines \n in some of the entries. Let’s replace it with a space. We are going to use Regular Expressions to search and replace.

In the following code, I am replacing all the diacritics, e.g.ř, ü, é, è by transliterating to Latin alphabet. This is not strictly necessary but is useful to remember that some place names are in Spanish in the US. Some databases store the diacritics and some don’t. Apologies to speakers of other languages.

It is always a good idea to have one case. We are going to use the upper case.

warn <- warn %>%

mutate(address = str_replace_all(address, "[[:space:]]", " "),

address = stringi::stri_trans_general(address, "Latin-ASCII"),

address = str_remove_all(address, "[[:punct:]]"),

address = str_to_upper(address)

)

library(postmastr)

warn <- pm_identify(warn, var = "address")

warn_min <- pm_prep(warn, var = "address", type ='street') # There do not seem to be any addresses that are based on intersections. So we are using the type=street.

nrow(warn)

# [1] 111

nrow(warn_min)

# [1] 91

You should notice the difference in the number of rows. 20 observations are dropped.

Extract Zipcodes and States

Zipcodes come in two formats. A 5 digit variety and 5-4 digit variety. pm_postal_parse is able to parse both types, though in this instance only 5 digit codes are present.

warn_min <- pm_postal_parse(warn_min)

In this instance only state present in the dataset is NC. First we need to create a dictonary in case NC is spelled out in different ways such as NORTH CAROLINA or NC or N CAROLINA. Fortunately, this dataset only contains NC. If not, use pm_append to add different instances of the state name to the dictionary.

ncDict <- pm_dictionary(locale = "us", type = "state", filter = "NC", case = "upper")

ncDict

# # A tibble: 2 × 2

# state.output state.input

# <chr> <chr>

# 1 NC NC

# 2 NC NORTH CAROLINA

(warn_min <- pm_state_parse(warn_min, dict=ncDict))

# # A tibble: 91 × 4

# pm.uid pm.address pm.state pm.zip

# <int> <chr> <chr> <chr>

# 1 1 895 WEST TRADE STREET CHARLOTTE NC 28202

# 2 2 5501 JOSH BIRMINGAHM PKWY CHARLOTTE NC 28208

# 3 3 4800 HANGAR ROAD CHARLOTTE NC 28208

# 4 4 5020 HANGAR ROAD CHARLOTTE NC 28208

# 5 5 4716 YORKMONT ROAD CHARLOTTE NC 28208

# 6 6 5501 JOSH BIRMINGHAM PKWY CHARLOTTE NC 28208

# 7 7 5000 HANGAR ROAD CHARLOTTE NC 28208

# 8 8 100 WEST TRADE STREET CHARLOTTE NC 28202

# 9 9 5501 CARNEGIE BLVD CHARLOTTE NC 28209

# 10 10 2200 REXFORD ROAD CHARLOTTE NC 28211

# # … with 81 more rows

ncCityDict <- pm_dictionary(locale = "us", type = "city", filter = "NC", case = "upper")

(warn_min <- pm_city_parse(warn_min, dictionary = ncCityDict))

# # A tibble: 91 × 5

# pm.uid pm.address pm.city pm.state pm.zip

# <int> <chr> <chr> <chr> <chr>

# 1 1 895 WEST TRADE STREET CHARLOTTE NC 28202

# 2 2 5501 JOSH BIRMINGAHM PKWY CHARLOTTE NC 28208

# 3 3 4800 HANGAR ROAD CHARLOTTE NC 28208

# 4 4 5020 HANGAR ROAD CHARLOTTE NC 28208

# 5 5 4716 YORKMONT ROAD CHARLOTTE NC 28208

# 6 6 5501 JOSH BIRMINGHAM PKWY CHARLOTTE NC 28208

# 7 7 5000 HANGAR ROAD CHARLOTTE NC 28208

# 8 8 100 WEST TRADE STREET CHARLOTTE NC 28202

# 9 9 5501 CARNEGIE BLVD CHARLOTTE NC 28209

# 10 10 2200 REXFORD ROAD CHARLOTTE NC 28211

# # … with 81 more rows

Parsing Street, Numbers and Direction

We can use similar functions to parse out the street number.

warn_min <- warn_min %>%

pm_house_parse()

Directionality of the street is little of a challenge. North could mean direction or a street name North St. Postmastr has logic already built into it to distinguish these two cases. By default, postmastr uses dic_us_dir dictionary.

dic_us_dir

# # A tibble: 20 × 2

# dir.output dir.input

# <chr> <chr>

# 1 E E

# 2 E East

# 3 N N

# 4 N North

# 5 NE NE

# 6 NE Northeast

# 7 NE North East

# 8 NW NW

# 9 NW Northwest

# 10 NW North West

# 11 S S

# 12 S South

# 13 SE SE

# 14 SE Southeast

# 15 SE South East

# 16 SW SW

# 17 SW Southwest

# 18 SW South West

# 19 W W

# 20 W West

We have already converted our strings to upper cases rather than leaving it in the sentence case as dic_us_dir assumes. We will have to modify the dictionary to fit our useccase.

dic_us_dir <- dic_us_dir %>%

mutate(dir.input = str_to_upper(dir.input))

warn_min <- warn_min %>%

pm_streetDir_parse(dictionary = dic_us_dir) %>%

pm_streetSuf_parse() %>%

pm_street_parse(ordinal = TRUE, drop = TRUE)

Once we have parsed data, we add our parsed data back into the source.

warn_parsed <- pm_replace(warn_min, source = warn) %>%

pm_rebuild(output="short", keep_parsed = 'yes')

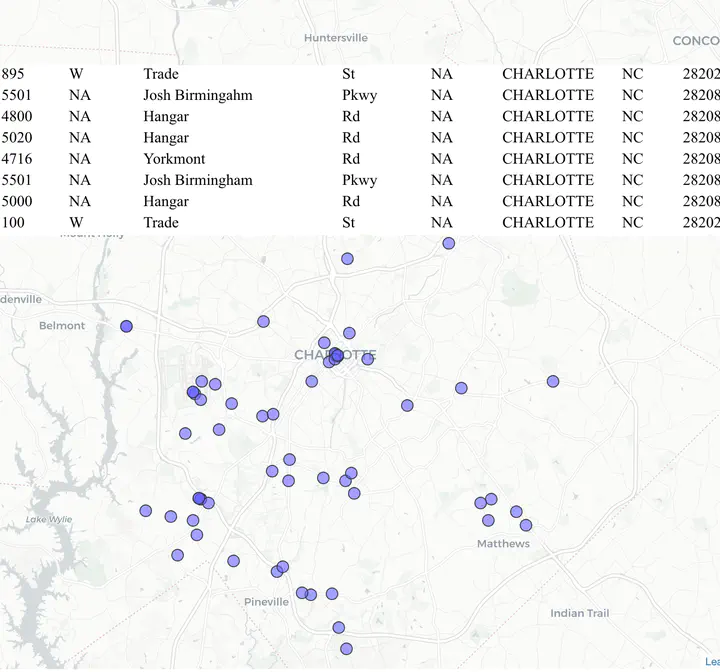

Now that it is straightforward to geocode the addresses using a census geocoder. You can quickly visualise using mapview.

library(censusxy)

warn_sf <- cxy_geocode(warn_parsed, street = "pm.address", city = "pm.city", state = "pm.state", zip = "pm.zip",

output = "full", class = "sf", parallel = 4) # You can only use parallel on non-Windows OS and must specify the number of cores that you want to employ for this exercise

library(tmap)

tmap_mode('view')

m1 <-

tm_shape(warn_sf)+

tm_symbols(col ='red') +

tm_basemap(leaflet::providers$Stamen.TonerHybrid)

library(widgetframe)

frameWidget(tmap_leaflet(m1))

Notice that only 75 of the unique 90 addresses are geocoded by the geocoder. Using other geocoders in combination might improve the hit rate.

Exercise

-

API calls are notoriously fickle. So this creates a replicability problem. However, you could also do this part locally. Download the master list of addresses for North Carolina and match the latitude and longitude with the parsed address fields in the two datasets.

-

Instead of

censusxygeocoder package, trytidygeocoder. See if you can improve the hitrate using multiple geocoding services.

Matching Names

Notice that in WARN data there are no NAICS codes. So it is hard to tell which industry is being affected by these layoffs. Fortunately, another dataset has names and NAICS codes. So just as we matched parsed addresses to latitude an longitude, we can try and match names to NAICS codes. However, names are more idiosyncratic than addresses.

Here are some rules, I came up using iterative methods. They are not exhaustive nor are they representative of what you might want to with other datasets.

# I am trying to remove common words in the name that can impact string similarity scores. Add to the list or modify to suit.

removalwords <- c("CORP", "CORPORATION", "SERVICE", "SERVICES", "CO", "GROUP", "HOLDINGS", "MANAGEMENT", "LTD", "INC", "LLC", "PC", "PLLC", "SOLUTIONS", "COMPANY", "COMPANIES", "MANAGEMENT", "MGMNT")

placenames <- tigris::places(state="NC", progress_bar = FALSE) %>% sf::st_set_geometry(NULL) %>% pull(NAME) %>% toupper()

compnames <- warn_parsed %>%

select(company, pm.zip) %>%

mutate(

orig_company = company,

company = str_squish(company), # Removes leading and trailing white spaces

company = str_remove_all(company, "\\&[A-Za-z]*;"), # This removes some HTML tags like " < etc.

company = str_remove_all(company, "COVID19"), # Removes a special tag

company = str_remove_all(company, "\\t|\\n|\\r"), # This removes newlines and some other returns

company = str_remove_all(company, "[[:punct:]]"), # This removes punctuations marks such as `,`, `'`, '?

company = str_replace_all(company, "\\.", "\ "), # This replaces . with a space.

company = str_replace(company, "\\D\\B\\A", "DBA"), #This replaces \D\B\A with DBA

company = str_replace_all(company, "\\<U\\+0082\\>", "E"), #This unicode character <U+0082> is usually meant to be an e in cafes.

company = str_to_upper(company), # Convert to upper case.

company = str_remove_all(company, paste0("\\b(",paste0(removalwords, collapse="|"), ")\\b")), # Remove all the words that are in the list above.

company = str_remove_all(company, paste0("\\b(",paste0(placenames, collapse="|"), ")\\b")), # Remove place names from the company names. This may not work out well if the company is called for e.g. Charlotte Plastics.

name1 = str_split(company, "DBA", n=2, simplify = T)[,1] %>% str_squish(), #The following two lines split the names of the company at DBA (Doing Business As).

name2 = str_split(company, "DBA", n=2, simplify = T)[,2] %>% str_squish()

)

We can make educated guesses about what the NAICS codes for the WARN dataset are going to by searching for some common substrings associated with the industry. For example, sector 72 is Accommodation and Food Services.

compnames <- compnames %>%

mutate(NAICS2 = case_when(

str_detect(company, "\\bMEDICAl\\b") ~ "62",

str_detect(company, '\\bAIRLINES\\b') ~ "48",

str_detect(company, '\\bRESTAURANT|RSTRNT\\b') ~ "72",

str_detect(company, '\\bCAFE|BAR\\b') ~ "72",

str_detect(company, "\\bFITNESS\\b") ~ "71",

TRUE ~ NA_character_

)

)

We still need to find out the NAICS code for 85 companies.

Fuzzy String Matching

For the rest of the dataset, we are going to use Reference USA dataset that has names and NAICS codes.

refUSA <- here("tutorials_datasets", "textmatching", "2019_Mecklenburg_RefUSA.csv") %>% read_csv() %>%

mutate(orig_company = company,

company = str_to_upper(company),

company = str_remove_all(company, "[[:punct:]]"),

company = str_replace_all(company, "\\.", "\ "),

company = str_remove_all(company, paste0("\\b(",paste0(removalwords, collapse="|"), ")\\b")),

company = str_squish(company)

) %>%

mutate(NAICS2 = str_sub(primary_naics, start=1, end=2)) %>%

filter(company != "")

Exercise

- Try matching (exact) on names from the WARN dataset to the RefUSA dataset. What is the match rate?

You will notice that the match rate is on exact match is not great because of various spellings and typos. Thus asking the question what is the row that matches the company name string in RefUSA dataset. So we instead ask a different question. How similar are two strings? To do that we have to understand the notion of distance between two strings. There are number of such distances. Here are some common ones.

- Levenshtein distance (lv) the minimum number of single-character edits (insertions, deletions or substitutions) required to change one word into the other

- Damerau–Levenshtein (dl) distance is the minimum number of operations (consisting of insertions, deletions or substitutions of a single character, or transposition of two adjacent characters) required to change one word into the other.

- Optimal String Alignment (osa) uses Damerau–Levenshtein distance but each substring may only be edited once.

- Hamming distance (hamming) is the number of positions with same symbol/character in both strings of equal length.

- Longest common substring distance (lcs) is the minimum number of symbols/characters that need to be removed to make the two strings the same.

- Jaro-winker distance (jw) is a distance that is derived from the lengths of two strings, number of shared symbols an their transpositions.

- soundex is distance between two strings when they are translated to phonetic code.

These are by no means exhaustive. They can even be improved upon (e.g. weighting spelling errors based on keyboard layouts.)

In the following code, I am going to use a bunch of different string distance algorithms, find the most similar string based on each algorithm and pick the string that is selected by many different algorithms.

library(stringdist)

distance_methods<-c('osa', 'dl','lcs','jw', 'soundex')

# While loops are terrible in R it is easy to program them. Better for readability.

for (i in 1:dim(compnames)[1]){

# Only focus on the subset of data that we did not already hard code the NAICS

if(is.na(compnames$NAICS2[i])){

company <- compnames[i,]

name1 <- company$name1

name2 <- company$name2

zcta <- company$pm.zip

# Create a target dataset.

refusa_select <- refUSA %>% filter(zip_code == zcta)

# The following creates a vector of potential candidates (best match based on different algorithms)

# In the following, I am using similarity score of 0.75 or higher. Adjust this for tighter or looser matches.

closestmatch <-

map_int(distance_methods, # for each distance method

possibly(

function(x){

name1sim <- stringsim(name1, refusa_select$company, method= x) # Create a string similarity between name1 and refusa name

name2sim <- stringsim(name2, refusa_select$company, method=x) # Create a string similarity between name2 and refusa name

# Apply a threshold

name1sim <- ifelse(name1sim<0.75, NA, name1sim)

name2sim <- ifelse(name2sim<0.75, NA, name2sim)

# Between name1sim an name2sim, pick the one with the highest score.

k <- pmax(name1sim, name2sim, na.rm=T) %>% which.max()

return(ifelse(length(k)!=0,k,NA_integer_))

},

NA_integer_

)

) %>%

.[!is.na(.)]

# Of the distance methods, only select the matches that pass the threshold and pick the NAICS code that has the highest frequency. Note that this code does not break ties.i.e. there may be multiple NAICS that potentially picked by multiple algorithms, but this code picks the one that is at the top (possibly ordered)

compnames$NAICS2[i]<-

ifelse(length(closestmatch)>0,

refusa_select[closestmatch,] %>%

group_by(NAICS2) %>%

summarize(no = n()) %>%

slice_max(no, n=1, with_ties = FALSE) %>% select(NAICS2) %>% unlist() %>% unname(),

NA_character_

)

rm(name1,name2,zcta,closestmatch)

}

}

Of the 111 we are able to determine the NAICS codes for 87 companies, a 78.38 % hit rate. This seems reasonable, for short amount of work.

Exercise

- Use a different set string distance algorithms, esp ones based on n-grams.

- Instead of selecting strings with maximum similarity, select top 5 similar strings and then pick the string that appears in most of these algorithms.

- What other preprocessing can be done to improve the hit rate?

- Spot check to see if the hits are accurate?

Conclusions

As you noticed, a lot of text/string manipulation relies on understanding the linguistic conventions and is an iterative processes. What works in one context may not work in another and you should be prepared to adapt. You will encounter edge cases much more frequently in this type of work, than other kinds of data munging operations. Nonetheless, it is a useful skill to have. In particular, it is useful get familiar with using Regular Expressions to search for patterns within strings. However, use it judiciously.

Additional Resources

- Silge, Julia, and David Robinson. 2020. Text Mining with R. Sebastapol, CA: O’ Reilly. https://www.tidytextmining.com/.